Computer-Generated Music

Overview

Music is a fundamental part of people’s lives and fits almost any situation, whether it is a relaxing song while studying, the backdrop for a video game, or entertainment on a long drive. This project explores automatic music generation: an artificial intelligence algorithm learns common characteristics and patterns from a set of training songs and uses them to create new pieces that are similar to, but distinct from, those songs. We started with study music because of its relatively simple musical structure, the large body of existing work, and its usefulness to university students.

Finding the Data

Initially, we planned to download study music from YouTube for the training data. Unfortunately, our algorithm only accepts MIDI files, and there is no reliable way to convert MP3 audio into MIDI, so we needed to look elsewhere. We eventually turned to the music from Minecraft, since most of its songs have a peaceful quality and are relaxing to study to. The final training songs can be found at the link below.

Training Songs (found on MuseScore)

Once we had picked our songs, we split the downloaded MIDI file so that each song had its own file. This way, the algorithm could tell which notes belonged to which song. We then went through the resulting files and removed any songs that were too short or that did not sound relaxing.

Training the Model

Because the model takes a long time to train, we ran our code on the University of Illinois Campus Cluster. Our queue had 224 CPU cores and 56 GPU cores in total, which shortened our runtime and meant we no longer had to run the code on a local machine.

The algorithm to train the model is as follows:

1). First, we read in all of the training MIDI files. For each note of every song, we extract key data: the pitch, the volume (velocity), the duration, the tempo at that note, and the time between that note and the note played before it.

2). Next, we normalize each characteristic by dividing it by its norm. We store both the norms and the normalized note data so that we can later convert the model's output back into real note values.

3). Once that is complete, we prepare the input and output sequences for the model. Each input sequence contains the normalized characteristics of N consecutive notes, and each output is the normalized characteristics of the note that immediately follows them. We store the input and output sequences in lists.

4). Finally, we create the model architecture and train the model on the prepared sequences. The model saves its current weights to a file so that we can resume training as needed and load the model later when we want to generate a new song. Even with the speedup from the Campus Cluster, training for 30,000 epochs took about a week of nearly continuous computation.
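The data-preparation part of the training steps (1 to 3) can be sketched in Python as follows. This is a hypothetical illustration, not the project's actual code: it assumes the notes have already been parsed from the MIDI files into simple `Note` records, it assumes "taking the norm" means dividing each feature by its L2 (Euclidean) norm computed over all training songs, and the names `Note`, `extract_features`, `normalize`, and `make_sequences` are invented for this example. The model architecture from step 4 is not described in the text, so it is not shown.

```python
import math
from dataclasses import dataclass


@dataclass
class Note:
    """Hypothetical record for one note already parsed from a MIDI file."""
    start: float     # onset time in seconds
    pitch: int       # MIDI note number, 0-127
    velocity: int    # MIDI velocity ("volume"), 0-127
    duration: float  # length in seconds
    tempo: float     # tempo in effect at this note, in BPM


def extract_features(notes):
    """Step 1: one row per note: [pitch, velocity, duration, tempo, offset].

    offset is the time since the previous note started (0 for the first note).
    """
    rows = []
    prev_start = None
    for note in sorted(notes, key=lambda n: n.start):
        offset = 0.0 if prev_start is None else note.start - prev_start
        rows.append([float(note.pitch), float(note.velocity),
                     note.duration, note.tempo, offset])
        prev_start = note.start
    return rows


def normalize(songs):
    """Step 2: divide each feature by its L2 norm taken over all songs.

    Returns the norms (kept for decoding model output later) and the
    normalized songs. A zero norm is replaced by 1.0 to avoid dividing by zero.
    """
    all_rows = [row for song in songs for row in song]
    num_features = len(all_rows[0])
    norms = []
    for j in range(num_features):
        norm = math.sqrt(sum(row[j] ** 2 for row in all_rows))
        norms.append(norm if norm > 0 else 1.0)
    normed = [[[row[j] / norms[j] for j in range(num_features)] for row in song]
              for song in songs]
    return norms, normed


def make_sequences(songs, n):
    """Step 3: pair each window of n consecutive notes with the note after it.

    Windows are built one song at a time, so no sequence spans two songs.
    """
    inputs, outputs = [], []
    for song in songs:
        for i in range(len(song) - n):
            inputs.append(song[i:i + n])
            outputs.append(song[i + n])
    return inputs, outputs
```

A typical call chain would be `norms, normed = normalize([extract_features(s) for s in songs])` followed by `make_sequences(normed, n)`. Building windows per song is one reason splitting the training data into one file per song helps: no input sequence mixes the end of one song with the start of another.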

Generating New Songs

Once we have the weights from the trained model, we can use those weights and the same training songs to generate new music.

The algorithm to generate a new song is as follows:

1). As in training, we load all of the training MIDI files, extract the key characteristics, calculate the norms, and prepare the input sequences.

2). Next, we load the model, using the MIDI file data and the weights from the training step.

3). With the model loaded, we can start generating. We pick a random starting sequence from our input sequences and ask the model to predict the note that should follow it. We add the predicted note to our generated song, then append it to the current sequence and remove the sequence's first note, so that the sequence length stays the same. We repeat this process to generate as many notes as we need.

4). After all of the notes are generated, we create the new MIDI file. For each generated note, we use the norms calculated in step 1 to undo the normalization and recover its characteristics, then convert those characteristics into a MIDI note. Once every generated note has been converted, we save the resulting file.

5). Finally, we import the new song into GarageBand for some final polishing: we quantize the notes, lengthen them, change the tempo, and pick a new instrument.
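Generation steps 3 and 4 can be sketched in Python as below. This is a minimal illustration under stated assumptions rather than the project's code: `predict_next` is a hypothetical stand-in for the trained model's prediction, each note is assumed to be a row `[pitch, velocity, duration, tempo, offset]` that was normalized by dividing each feature by a stored norm, and `generate` and `decode` are invented names. Writing the decoded notes to an actual MIDI file depends on the MIDI library used, so it is left out, as is the GarageBand polishing.

```python
import random
from collections import deque


def generate(input_sequences, predict_next, num_notes, rng=None):
    """Generate num_notes normalized note rows from a random seed sequence.

    predict_next is a hypothetical stand-in for the trained model: it receives
    the current window (a list of normalized rows) and returns the next row.
    """
    if rng is None:
        rng = random.Random()
    seed = rng.choice(input_sequences)
    # A deque with maxlen drops its oldest element on append, so the window
    # always keeps the same length as the seed sequence.
    window = deque(seed, maxlen=len(seed))
    generated = []
    for _ in range(num_notes):
        next_row = predict_next(list(window))
        generated.append(next_row)
        window.append(next_row)
    return generated


def decode(generated, norms):
    """Undo the normalization and turn rows into simple note dictionaries.

    Rows are assumed to use the layout [pitch, velocity, duration, tempo,
    offset]; each value is multiplied back by the norm of its feature.
    """
    notes = []
    start = 0.0
    for i, row in enumerate(generated):
        pitch, velocity, duration, tempo, offset = (v * n for v, n in zip(row, norms))
        if i > 0:
            start += max(offset, 0.0)  # the first generated note starts at 0
        notes.append({
            "start": start,
            # Clamp to the valid MIDI range; velocity 0 would act as a note-off.
            "pitch": min(max(round(pitch), 0), 127),
            "velocity": min(max(round(velocity), 1), 127),
            "duration": max(duration, 0.0),
            "tempo": tempo,
        })
    return notes
```

The clamping in `decode` matters because the model predicts continuous values, which can drift outside the ranges a MIDI file accepts.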

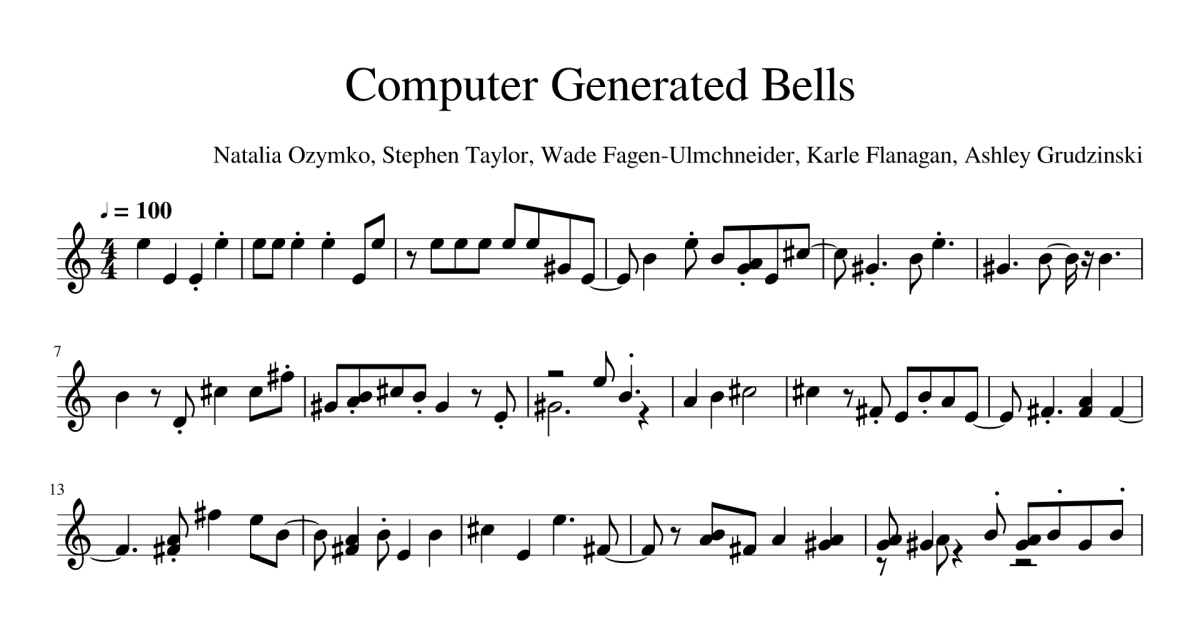

Releasing Our Work

In the end, we decided to release one hour-long song each day for five days. The final songs are available on both YouTube and SoundCloud, and the links are below. We hope you enjoy them! 😊